So you want to change the underlying CNI provider in your Kubernetes cluster? In some cases it’s as simple as applying a few YAML manifests; in others, you have to do the hard work yourself. For instance, going from Flannel to Calico is almost trivial, but to go from Calico to Flannel you will have to do most of the work manually.

This is not a common task in daily Kubernetes administration. It’s much more likely that you will simply provision a fresh cluster and retire the old one. But it’s a nice little deep dive into Kubernetes networking, and it will probably show the CNI abstraction in a different light. Also, there might be some obscure edge cases that force you to do this kind of migration. In my case, it was simply that Calico was not playing nice with a bunch of Multus-installed network interfaces that were required for connecting KubeVirt VMs to physical network infrastructure.

What are we doing here?

We are replacing the cluster’s main CNI plugin, swapping Calico for Flannel. CNI stands for Container Network Interface; the plugin handles the allocation and clean-up of pod network interfaces. It’s the abstraction through which each pod gets connected to every other pod and gets its own unique network endpoint (e.g. an IP address). CNIs can do way more, but this is the bare minimum required to have a functioning k8s cluster.

So to replace one CNI with another we simply need to install the new one, then delete and clean up the old one. We also have to tell Kubernetes what the new pod network range will be (--cluster-cidr=10.244.0.0/16) and repeat that on all master nodes, followed by all worker nodes. Alternatively, you can just provision new workers and delete the old ones, assuming your stateful workloads are not dependent on their host nodes.

Still with me?

The critical thing here is to clean up after Calico properly, because otherwise you might end up with weird non-deterministic behavior in the networking stack. Think of pods occasionally sending traffic to the wrong pod, losing connectivity, or terrible performance. For this reason, the move from Calico to Flannel needs to be properly planned and executed. It also helps to understand how the underlying containers work.

You need to understand how both Calico and Flannel work, as well as understand and apply a few commands. So you’re gonna get an explanation first and then the code. This way you have to go out of your way to copy random snippets of code from some guy you found on the internet. If you fully understand what these snippets do, good job. If you don’t, NO! Bad Operator! I warn you: Krampus will come and fill your stocking with burnt SSDs!

How Calico works

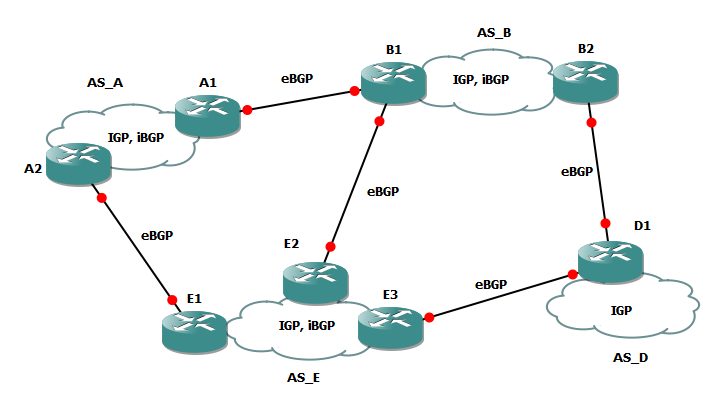

Calico is an L3 network that uses the BGP protocol to build routing tables between agent nodes, with each k8s node running a Calico agent that acts as a BGP peer. BGP, or Border Gateway Protocol, works by having all peers share routing information between themselves. When a peer encounters a packet that cannot be served locally, the packet gets sent past the border through the gateway to another peer. The peer choice is based on the data shared between peers.

In a local, or internal, BGP network this is in most cases the node you want. In external BGP it’s the best guess as to what will bring your packet closer to the destination. Because of the way each peer keeps track of connected peers, this network can very easily scale to global proportions. It’s literally the backbone of all long-distance internet traffic. Check this article if you want to know more about how this works for the Calico CNI.

But for the purposes of this article, Calico acts as a BGP router. On each node, Calico also creates IP routes and IP links. These routes and links point to every pod IP address assigned by the Calico CNI. So our cleanup should be as simple as deleting Calico, as well as all routes and links created by Calico. To see what Calico created, run the following commands:

ip link list | grep -i cali # Get all IP links with cali in their name

iptables-save | grep -i cali # Get all iptables rules and chains that mention cali

ip route | grep -i cali # Get all IP routes that go through cali interfaces
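If you want the interface names only (for example to count them before and after the cleanup), a small filter can help. This is a minimal sketch, not an official Calico tool: the function names calico_links and sample_links are made up for illustration, and the sample text only imitates what ip -o link show prints on a Calico node.

```shell
# Read `ip -o link show` output on stdin and print the names of
# interfaces that start with "cali" (the prefix Calico uses for pod veths).
calico_links() {
  awk -F': ' '{ name = $2; sub(/@.*/, "", name); if (name ~ /^cali/) print name }'
}

# Illustrative sample input; on a real node use: ip -o link show | calico_links
sample_links() {
cat <<'EOF'
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP mode DEFAULT group default qlen 1000
5: cali1a2b3c4d5e6@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1440 qdisc noqueue state UP mode DEFAULT group default
EOF
}

sample_links | calico_links   # prints: cali1a2b3c4d5e6
```

Because the filter only reads text, it is safe to run anywhere; nothing gets deleted at this point.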

How Flannel works

Flannel, on the other hand, uses VXLAN to create an L3 network fabric between agents. It runs a small agent, flanneld, on every node. Every node gets its own subnet (by default 10.244.<<node_unique>>.0/24) and every pod gets a unique IP from it. This means that we can have up to 254 pods per node before we run out of IPs (check subnet masks if this sounds confusing). On every node, we have a bridge called cni0 to which all pods hosted on that node are connected. So local communication is simply an Ethernet frame addressed to, say, 10.244.1.42: all pods on the bridge can see it, and if one of them cares, it accepts it. This is great for performance, but it also stops Flannel from implementing advanced CNI features such as network policy and pod isolation.
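If the subnet math sounds confusing, here is the arithmetic spelled out. It is a minimal sketch using plain shell arithmetic; the helper names usable_hosts and node_subnets are made up for this example. Keep in mind that the cni0 bridge typically takes one of the usable addresses for itself.

```shell
# Usable host addresses in an IPv4 subnet with prefix length $1
# (the network and broadcast addresses are excluded).
usable_hosts() {
  echo $(( (1 << (32 - $1)) - 2 ))
}

# How many subnets of prefix length $2 fit into a range of prefix length $1.
node_subnets() {
  echo $(( 1 << ($2 - $1) ))
}

usable_hosts 24      # prints 254: addresses in one node's /24
node_subnets 16 24   # prints 256: per-node /24 subnets inside 10.244.0.0/16
```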

To enable cross-node traffic, Flannel encapsulates packets with VXLAN. VXLAN takes an L2 Ethernet frame (carrying the pod-to-pod IP packet) and wraps it in an L4 UDP datagram. That datagram is then sent through a VTEP (VXLAN Tunnel Endpoint) device to the desired destination. VTEP devices are physical or virtual switches sitting between hosts that route all egress traffic to other VTEP devices in the same network. This sounds quite complicated, but it’s actually relatively simple and focused. Flannel lacks advanced network policy features and encryption, but it generally has very good performance and low resource utilization compared with other CNI providers.
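One practical consequence of the encapsulation is that every cross-node packet carries extra headers, so the pod-side MTU has to be smaller than the MTU of the physical network. The sketch below just adds up the standard header sizes for VXLAN over IPv4; the function name vxlan_inner_mtu is made up for this example.

```shell
# Largest inner packet that fits once VXLAN-over-IPv4 headers are added.
# $1 is the MTU of the underlying (physical) network.
vxlan_inner_mtu() {
  outer_ip=20    # outer IPv4 header
  udp=8          # outer UDP header
  vxlan=8        # VXLAN header
  inner_eth=14   # Ethernet header of the encapsulated frame
  echo $(( $1 - outer_ip - udp - vxlan - inner_eth ))
}

vxlan_inner_mtu 1500   # prints 1450
```

If the VXLAN device on your nodes reports a different MTU, your underlay MTU is most likely not 1500.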

In case you want to learn more about these virtual devices, check out this Red Hat introduction. Now that we have seen a bunch of lovely acronyms and fancy network names, we all deserve a nice cat joke. If you don’t understand the joke, you have to reread the Flannel/VXLAN section above 🙂

How to go from Calico to Flannel

Now we can start fully deleting Calico from our cluster and installing Flannel. As mentioned earlier, Calico runs some binaries and also configures a large part of its network through the ip and iptables Linux utilities. So we have to delete the in-cluster resources and clean up every node. After that, we can install Flannel or another CNI of our choice.

BACKUP EVERYTHING BEFORE STARTING

Any mishap in the steps below can leave the cluster in a bricked state! Ideally, snapshot the master nodes through your VM/cloud provider before playing around with these things.

Deleting Calico cluster resources

This is more or less identical to how we installed Calico in the first place, except that we use the keyword delete instead of apply. DO NOT COPY-PASTE! Ideally, you have documented the exact way you set up your cluster; if not, you will have to find your Calico version and then find the YAML matching your Calico installation. The general process goes something like this:

- You download or find the calico .yaml you used to install calico on your cluster:

curl https://docs.projectcalico.org/manifests/calico.yaml -O

- You delete it from the cluster using:

kubectl delete -f calico.yaml

If you are unable to find the appropriate install manifest you have to delete k8s resources manually. You can try looking for all things in your cluster and deleting all calico-related resources you find. You can run commands similar to:

- Get all resources with calico in the name:

kubectl get all --all-namespaces | grep calico

- Get all namespaced api resources:

kubectl api-resources --verbs=list --namespaced -o name | grep calico

- Get all non-namespaced api resources:

kubectl api-resources --verbs=list --namespaced=false -o name | grep calico

The reason for the two api-resources commands is that Calico might create custom resources that may or may not be namespaced, so you usually need both commands to find them all.
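Once you have the list of Calico resource types, you still have to look at the actual objects. A minimal sketch of how that could be scripted: the helper below (calico_get_commands, a made-up name) reads resource type names on stdin and prints one kubectl get command per Calico type, so you can review the commands before running anything. The type names in the sample are examples of Calico CRDs and may differ on your cluster.

```shell
# Read resource type names (one per line) on stdin and print a
# `kubectl get` command for every type that mentions calico.
calico_get_commands() {
  grep -i 'calico' | while read -r kind; do
    echo "kubectl get $kind --all-namespaces -o name"
  done
}

# Illustrative sample; on a real cluster feed it with:
#   kubectl api-resources --verbs=list -o name | calico_get_commands
printf '%s\n' pods ippools.crd.projectcalico.org felixconfigurations.crd.projectcalico.org \
  | calico_get_commands
```

The function only prints commands. Delete the objects it helps you find by hand, once you are sure they belong to Calico.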

Installing Flannel

To install Flannel, just follow the official install guide. It should be painless enough. Next, modify Kubernetes’s pod network range by replacing the Calico network CIDR with the one used by Flannel. In /etc/kubernetes/manifests/kube-controller-manager.yaml, change the line starting with --cluster-cidr from the Calico range (typically the one originally passed to kubeadm via --pod-network-cidr, e.g. --cluster-cidr=192.168.0.0/24) to --cluster-cidr=10.244.0.0/16, restart kubelet, and repeat on all master nodes.
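If you would rather script the manifest change than edit it by hand, something like the following could work. It is a sketch under assumptions: set_cluster_cidr is a made-up helper, and it prints the modified manifest to stdout instead of editing the file in place, so you can diff the result before replacing anything.

```shell
# Read a manifest on stdin and print it with the value of the
# --cluster-cidr flag replaced by $1. Other lines pass through untouched.
set_cluster_cidr() {
  sed -E "s#--cluster-cidr=[^[:space:]]+#--cluster-cidr=$1#"
}

# Illustrative fragment of a kube-controller-manager manifest.
printf '%s\n' '    - --allocate-node-cidrs=true' '    - --cluster-cidr=192.168.0.0/16' \
  | set_cluster_cidr 10.244.0.0/16
```

On a master node you would run it roughly as set_cluster_cidr 10.244.0.0/16 < /etc/kubernetes/manifests/kube-controller-manager.yaml > /tmp/kube-controller-manager.yaml, compare the two files, and only then copy the new one over the original.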

Cleaning up k8s nodes after the Calico to Flannel move

You have to repeat the following steps on every k8s node. Alternatively, you can create new nodes and just cordon, drain and delete old nodes. This way you don’t have to deal with per-node cleanup but depending on your setup and/or interests the manual way below might be more appropriate:

- clear ip route:

ip route flush proto bird

- remove all calico links in all nodes:

ip link list | grep cali | awk '{print $2}' | cut -c 1-15 | xargs -I {} ip link delete {}

- delete ipip kernel module:

modprobe -r ipip

- remove calico configs:

rm /etc/cni/net.d/10-calico.conflist && rm /etc/cni/net.d/calico-kubeconfig

OR temporarily remove them (in case you want a painless rollback):

mv /etc/cni/net.d/10-calico.conflist /tmp && mv /etc/cni/net.d/calico-kubeconfig /tmp
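For the rollback-friendly variant, a small helper keeps the stashed files in one place instead of loose in /tmp. This is a minimal sketch: stash_calico_cni_config is a made-up name, the two file names are the ones used in this article, and the demo runs against a throwaway directory rather than the real /etc/cni/net.d.

```shell
# Move the Calico CNI config files from directory $1 into backup
# directory $2. Files that do not exist are silently skipped.
stash_calico_cni_config() {
  src=$1
  dst=$2
  mkdir -p "$dst"
  for f in "$src/10-calico.conflist" "$src/calico-kubeconfig"; do
    if [ -e "$f" ]; then
      mv "$f" "$dst/"
    fi
  done
}

# Demo on a temporary directory standing in for /etc/cni/net.d.
demo=$(mktemp -d)
mkdir -p "$demo/net.d"
touch "$demo/net.d/10-calico.conflist" "$demo/net.d/calico-kubeconfig"
stash_calico_cni_config "$demo/net.d" "$demo/backup"
ls "$demo/backup"
rm -rf "$demo"
```

On a node the call would look like stash_calico_cni_config /etc/cni/net.d /root/calico-cni-backup (the backup path is just an example). To roll back, move the files back.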

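In case you used network policy with Calico, you also need to flush and delete iptables rules and chains. Be aware that iptables -F and iptables -X do not read standard input, so the two commands below flush every rule and delete every user-defined chain in the filter table, not only the Calico ones:

iptables-save | grep -i cali | iptables -F

iptables-save | grep -i cali | iptables -X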
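A more selective approach that is commonly used is to dump the rules, drop every line that mentions cali, and load the result back with iptables-restore. The sketch below shows only the filtering part on sample data; strip_calico_rules and sample_rules are made-up names, the sample ruleset is illustrative, and the approach assumes nothing else on the node has "cali" in a rule or chain name.

```shell
# Read iptables-save output on stdin and print it without any line
# (chain declaration or rule) that mentions cali.
strip_calico_rules() {
  grep -iv 'cali'
}

# Illustrative sample of what iptables-save prints on a Calico node.
sample_rules() {
cat <<'EOF'
*filter
:INPUT ACCEPT [0:0]
:cali-INPUT - [0:0]
-A INPUT -m comment --comment "cali:Cz_u1IQiXIMmKD4c" -j cali-INPUT
-A INPUT -s 10.0.0.0/8 -j ACCEPT
COMMIT
EOF
}

sample_rules | strip_calico_rules
```

On a real node the full pipeline would be iptables-save | strip_calico_rules | iptables-restore. Save a copy of the original iptables-save output first so you can restore it if something goes wrong.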

Finally, restart the node’s kubelet and wait for the master to mark the node as Ready:

service kubelet restart

This command is Debian flavored, so it might be a bit different in other Linux distros.
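To see which nodes the master has not marked as Ready yet, you can filter the node list. A minimal sketch: not_ready_nodes and sample_nodes are made-up names, and the node names and versions in the sample are invented. A status such as Ready,SchedulingDisabled (a cordoned node) counts as ready here.

```shell
# Read `kubectl get nodes --no-headers` output on stdin and print the
# names of nodes whose STATUS column does not start with "Ready".
not_ready_nodes() {
  awk '$2 !~ /^Ready/ { print $1 }'
}

# Illustrative sample; on a real cluster use:
#   kubectl get nodes --no-headers | not_ready_nodes
sample_nodes() {
cat <<'EOF'
master-1   Ready                      control-plane   90d   v1.27.3
worker-1   NotReady                   <none>          90d   v1.27.3
worker-2   Ready,SchedulingDisabled   <none>          90d   v1.27.3
EOF
}

sample_nodes | not_ready_nodes   # prints: worker-1
```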

Recreating all pods

The last thing you need to do (unless you went the delete old and create new nodes route) is to recreate all pods so that they grab the new network configuration. You need to make sure that your workloads are safe for this sort of recreation. Also, anything not created through a controller of some sort (e.g. just plain pods) will just get deleted, so caveat emptor! Anyway, lone pods have no place in production clusters 🙂 Then simply delete all pods in all namespaces using kubectl delete pods --all --all-namespaces and wait for it to finish. And voilà! You got yourself a shiny cluster with a brand new CNI plugin.
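Before pulling the trigger, it may be worth listing the lone pods that would not come back. The sketch below assumes you list pods with kubectl’s custom-columns output, where a pod without an owner shows <none> in the owner column; bare_pods and sample_pods are made-up names and the pod names are invented.

```shell
# Read lines of "NAMESPACE NAME OWNER-KIND" on stdin and print
# namespace/name for pods that have no owner (plain pods).
bare_pods() {
  awk '$3 == "<none>" { print $1 "/" $2 }'
}

# Illustrative sample; on a real cluster the input could come from:
#   kubectl get pods --all-namespaces --no-headers \
#     -o 'custom-columns=NS:.metadata.namespace,NAME:.metadata.name,OWNER:.metadata.ownerReferences[0].kind'
sample_pods() {
cat <<'EOF'
default       web-7d4b9c6f5-abcde   ReplicaSet
default       debug-shell           <none>
kube-system   kube-proxy-x2k4q      DaemonSet
EOF
}

sample_pods | bare_pods   # prints: default/debug-shell
```

Anything this prints will be gone for good after the delete, so export its manifest first if you still need it.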

Extra reading:

- https://stackoverflow.com/questions/60176343/how-to-make-the-pod-cidr-range-larger-in-kubernetes-cluster-deployed-with-kubead


- https://github.com/flannel-io/flannel

- https://gist.github.com/rkaramandi/44c7cea91501e735ea99e356e9ae7883

- https://blog.laputa.io/kubernetes-flannel-networking-6a1cb1f8ec7c

- https://www.ibm.com/docs/en/cloud-private/3.1.2?topic=ins-calico

- https://stackoverflow.com/questions/53610641/how-to-delete-remove-calico-cni-from-my-kubernetes-cluster

- Flannel official install guide
